AI in the eyes of the law

In 2023 artificial intelligence (AI) moved away from the realm of sci-fi and hit the mainstream, with people now using it at home and, increasingly, at work. Indeed, AI has been talked about so much recently that publisher Collins named it 2023’s word of the year. But, as with all new tools, we need to learn how to use AI properly, in a way that won’t cause unexpected problems down the track.

So, what do you need to know?

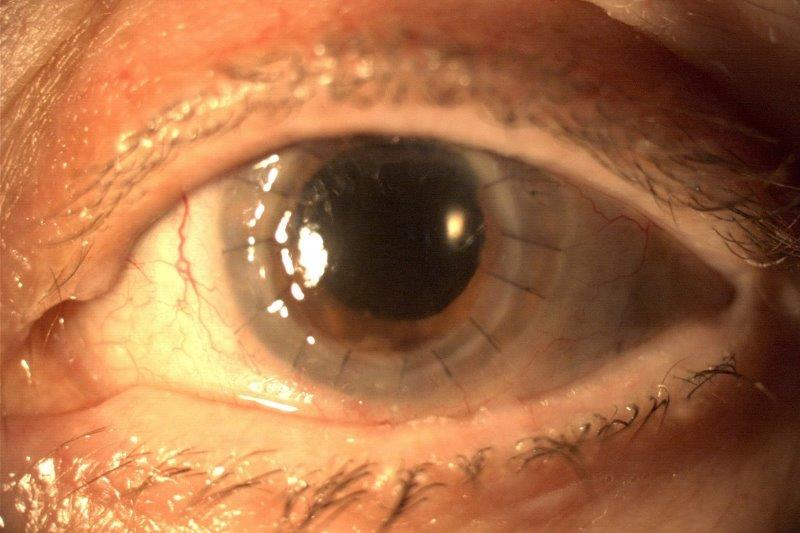

You may not realise it, but it’s highly likely you’re already using AI in various aspects of your work. AI is commonly found in HR tools used in businesses and in the chatbots popping up on websites. Health diagnostics has also been a leader in using AI technology, with early developments focusing on the diagnosis of retinal disorders, cataracts and glaucoma.

Using AI tools can be helpful, and in some cases necessary, to provide the best possible service to your customers. But it can also expose them to greater risks.

Privacy

One of the biggest concerns with AI is the risk to privacy. The Privacy Act 2020 and the Health Information Privacy Code 2020 take the protection of individuals’ privacy very seriously. They require, among other things, that:

• People know when health information is being collected from them

• The information is collected directly from that person and not from other sources

• People are told of the purposes for which it will be used

• The information is not used for any other purposes (with limited exceptions, including permitting its use for statistical or research purposes, as long as the individual cannot be identified)

• The information is stored and disposed of securely

The use of AI tools can stretch the limits of these requirements. For example, relying on AI to make decisions and to draw connections between data involving personal information (such as when AI is used as a diagnostic tool) can produce more personal information than was originally input. This can result in ‘data proxying’, where something that is not expressly known about an individual can be implied or imputed from other information known about them. This could breach the Privacy Act, either because information has been collected from sources other than the individual, or because individuals who were thought to be anonymous can in fact be identified.

Inaccurate results from AI are also a significant concern. ChatGPT and other types of generative AI go beyond working with information that already exists. Instead, they generate new information based on patterns in the information they have previously seen. This means generative AI is prone to what its developers call “hallucinations”, where, instead of admitting that it doesn’t have an answer, the tool makes up facts. The overall rate of ChatGPT hallucinations has been put at between 15% and 20%, and it is much higher in technical fields, including law and medicine. Well-trained, purpose-built programmes can be very accurate: a recent study found that AI-supported reading of mammograms detected 20% more cancers than routine double reading by radiologists, without increasing false positives. However, the results from most AI tools are currently unreliable and need independent verification.

Finally, a lack of transparency in the technology can create real risks. Trust is a fundamental part of privacy protection and, once undermined, can be nearly impossible to regain. If organisations can’t account for how an AI system makes decisions about individuals, or how their information generated a specific outcome, consumers may struggle to trust those organisations to store their data.

Similarly, you might have done all the checks when you first started working with a business and signed up to their terms and conditions, but can you trust that nothing has changed? Successful businesses are often purchased and their terms and conditions altered. The popular genetic testing business 23andMe quickly established a DNA database of millions of people around the world. It subsequently received significant investment from a pharmaceutical company and is now connected with Virgin. Its data, once used to research ancestry, is now used to determine the likelihood of future illnesses, life expectancy and requirements for healthcare. This is not something the original customers would have expected when they signed up, but it is something businesses need to consider when protecting their customers’ information.

Complying with privacy requirements

Fortunately, complying with privacy requirements is relatively straightforward:

• Only hold the data you need

• Have systems in place to ensure that the data is only used for proper purposes and is securely disposed of when no longer needed

• If information is provided to a third party, ensure it will either not be retained at all, or will be destroyed after a minimal period of time, such as one month

• Where practicable, store and use information without details that can identify the person involved
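For organisations that handle this information in software, the steps above can be sketched in a few lines of Python. This is an illustration only, not a compliance tool: the field names, the 30-day period (taken from the "one month" example above) and the function names are all assumptions made for the example.

```python
import hashlib
import hmac
from datetime import date, timedelta

# Hypothetical field names, chosen for illustration only.
NEEDED_FIELDS = {"patient_id", "age_band", "scan_result"}
# Assumed retention period, based on the "one month" example above.
RETENTION = timedelta(days=30)


def minimise(record: dict) -> dict:
    """Keep only the fields needed for the stated purpose."""
    return {k: v for k, v in record.items() if k in NEEDED_FIELDS}


def pseudonymise(record: dict, secret_key: bytes) -> dict:
    """Replace the direct identifier with a keyed hash (HMAC-SHA256)."""
    out = dict(record)
    identifier = str(record["patient_id"]).encode("utf-8")
    out["patient_id"] = hmac.new(secret_key, identifier, hashlib.sha256).hexdigest()
    return out


def is_due_for_disposal(shared_on: date, today: date) -> bool:
    """True once information has been held longer than the retention period."""
    return today - shared_on > RETENTION


# Example record (made-up data).
record = {
    "patient_id": "ID-0000",
    "name": "Example Person",
    "age_band": "40-49",
    "scan_result": "clear",
}
safe = pseudonymise(minimise(record), secret_key=b"replace-with-a-real-secret")
```

Note that replacing an identifier with a keyed hash reduces the risk of identification but does not remove it. The remaining fields may still allow a person to be identified by inference, which is the ‘data proxying’ concern described earlier.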

A breach of your responsibilities under the Privacy Act can result in proceedings in the Human Rights Review Tribunal and an award of damages of up to $350,000 in favour of the person whose privacy was breached.

If you have any questions about using AI, or about protecting the privacy of personal information, please contact Duncan Cotterill, where our employment law, health law, or data protection and privacy teams will be able to help you.

Jessie Lapthorne is a partner in Duncan Cotterill’s employment team. You can contact her on jessie.lapthorne@duncancotterill.com.